In the previous post I introduced the first version of adaptive offscreen particles (AOP) and mentioned a number of its drawbacks. In the new version of AOP, which I describe below, I tried to get rid of them.

Motivation

The offscreen particles article in GPU Gems discusses mixed-resolution rendering. This approach is well suited to effects with low-resolution textures, such as smoke. But what if we want to keep fine details, such as sparks, fire, and stones, and still benefit from mixed-resolution rendering? The answer is a color contrast filter with overdraw prediction.

Prediction

I colored the buildings green to hide content details.

Lower-resolution particle rendering

Offscreen particles can be rendered into a buffer that is 1/4, 1/16, or an even smaller fraction of the screen size.
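As a rough illustration, the buffer size for a given fraction could be computed as below. This is only a sketch: it assumes the fractions refer to pixel count (so 1/4 means half the width and half the height), and the names `Extent` and `offscreenExtent` are made up for this example.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper type for this sketch.
struct Extent {
    std::uint32_t width;
    std::uint32_t height;
};

// Size of an offscreen buffer whose width and height are halved `halvings` times.
// halvings = 1 gives 1/4 of the pixels, halvings = 2 gives 1/16, and so on.
// Sizes are rounded up so that odd screen dimensions stay fully covered.
Extent offscreenExtent(Extent screen, std::uint32_t halvings) {
    assert(halvings < 16);  // keep the shift well defined
    const std::uint32_t divisor = 1u << halvings;
    return Extent{(screen.width + divisor - 1) / divisor,
                  (screen.height + divisor - 1) / divisor};
}
```

Rounding up is a design choice for this sketch; rounding down would leave the last row or column of an odd-sized screen without coverage.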

The scene color buffer and the offscreen buffers should have the same bit depth to ensure similar results after alpha blending. I would recommend the A16B16G16R16F format.

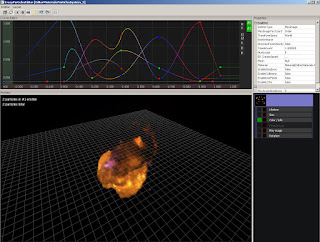

Figure 1. Offscreen particles accumulated in a separate render target.

Depth preparation

To render offscreen particles correctly, we have to downscale the original depth buffer, keeping the maximum depth of the source pixels that map to each low-resolution pixel.
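One downsampling stage could look like the following CPU-side sketch. It assumes a row-major buffer with even dimensions, a 2x2 footprint per stage, and a conventional depth range where larger values are farther away; the function name `downsampleDepthMax` is hypothetical, and on the GPU this would normally be a pixel or compute shader.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Halve a row-major depth buffer in each dimension,
// keeping the maximum depth of every 2x2 block.
std::vector<float> downsampleDepthMax(const std::vector<float>& depth,
                                      std::size_t width, std::size_t height) {
    assert(width % 2 == 0 && height % 2 == 0);
    assert(depth.size() == width * height);
    const std::size_t outW = width / 2;
    const std::size_t outH = height / 2;
    std::vector<float> out(outW * outH);
    for (std::size_t y = 0; y < outH; ++y) {
        for (std::size_t x = 0; x < outW; ++x) {
            const std::size_t i = (2 * y) * width + 2 * x;  // top-left texel of the block
            out[y * outW + x] = std::max({depth[i], depth[i + 1],
                                          depth[i + width], depth[i + width + 1]});
        }
    }
    return out;
}
```

Calling the function repeatedly on its own output gives the smaller stages (1/4, then 1/16 of the pixels). With a reversed depth buffer the minimum would play the same role.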

Figure 2. Depth downsampling stages.

Full-resolution detail particle rendering and edge fixing

Of course, the level of detail of the offscreen particles does not suit us in some places. These places should therefore be found with the color contrast filter and the depth-based edge detection filter, and finally replaced by fullscreen particles.

Building the stencil mask

For performance reasons, a stencil mask should be used to separate the final application of the offscreen particles from the fullscreen particles pass.
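Conceptually, the mask marks every pixel that either of the two filters in this section flags as needing detail. The sketch below shows that idea on the CPU. The stencil values, the per-pixel flag arrays, and the name `buildStencilMask` are assumptions made for illustration, not the values used in the actual implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stencil values for this sketch.
constexpr std::uint8_t kStencilOffscreen = 0;  // covered by low-resolution particles
constexpr std::uint8_t kStencilDetail = 1;     // re-rendered with fullscreen particles

// Mark a pixel for the detail pass if the color contrast filter
// or the edge detection filter flagged it.
std::vector<std::uint8_t> buildStencilMask(const std::vector<bool>& highContrast,
                                           const std::vector<bool>& depthEdge) {
    assert(highContrast.size() == depthEdge.size());
    std::vector<std::uint8_t> mask(highContrast.size());
    for (std::size_t i = 0; i < mask.size(); ++i) {
        mask[i] = (highContrast[i] || depthEdge[i]) ? kStencilDetail
                                                    : kStencilOffscreen;
    }
    return mask;
}
```

On the GPU each pass would then set its stencil test to accept only its own value, so no pixel is shaded by both passes.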

Color contrast filter

As its name suggests, this filter looks for contrast in color. If the contrast is high enough, detail is required. This filter is a pretty cool thing because it depends on the information on the screen. This means that if the camera is close to the particles, fewer details will be found, which leads to better performance.

Figure 3. Color contrast filter working result.

B - max component value of the neighbors' colors

C - contrast, 15 by default

should_be_detailed = abs( B - A ) > A / C;
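The test above can be sketched on the CPU as follows. The text does not define A, so this sketch assumes it is the maximum color component of the current pixel. Which neighbors are sampled is also not stated, so the caller passes them in. `Color`, `maxComponent`, and `shouldBeDetailed` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Color {
    float r, g, b;
};

float maxComponent(const Color& c) {
    return std::max({c.r, c.g, c.b});
}

// A: max component of the current pixel (assumption, not defined in the text).
// B: max component over all neighbor colors.
// C: contrast, 15 by default.
bool shouldBeDetailed(const Color& center, const std::vector<Color>& neighbors,
                      float contrast = 15.0f) {
    assert(!neighbors.empty());
    assert(contrast > 0.0f);
    const float a = maxComponent(center);
    float b = maxComponent(neighbors.front());
    for (const Color& n : neighbors) {
        b = std::max(b, maxComponent(n));
    }
    return std::fabs(b - a) > a / contrast;
}
```

Because the threshold is `A / C`, it is relative: a bright pixel tolerates a larger absolute difference than a dark one, and a larger C makes the filter more sensitive.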

Edge detection filter

In addition, this filter should be used to cover edges. My filter is based on depth discontinuities.
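A depth discontinuity test could be sketched like this. It is not the filter used in the article, only one simple variant: it compares a pixel's depth with its four direct neighbors against a fixed threshold. The threshold, the neighborhood, and the name `isDepthEdge` are all assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns true if the depth at (x, y) differs from any of its four direct
// neighbors by more than `threshold`. Neighbors outside the buffer are skipped.
bool isDepthEdge(const std::vector<float>& depth, std::size_t width,
                 std::size_t height, std::size_t x, std::size_t y,
                 float threshold) {
    assert(depth.size() == width * height);
    assert(x < width && y < height);
    const float center = depth[y * width + x];
    auto differs = [&](std::size_t nx, std::size_t ny) {
        return std::fabs(depth[ny * width + nx] - center) > threshold;
    };
    return (x > 0 && differs(x - 1, y)) ||
           (x + 1 < width && differs(x + 1, y)) ||
           (y > 0 && differs(x, y - 1)) ||
           (y + 1 < height && differs(x, y + 1));
}
```

A fixed threshold is the simplest choice; with a non-linear depth buffer it may be more robust to compare linearized depths instead.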

Figure 4. Edge detection filter working result.

Combining

Depending on the values in the stencil mask, either the offscreen particles are blended or the detail particles are rendered into the scene buffer. I described how to apply offscreen particles to the scene color buffer in the previous article. (I colored the buildings green to hide content details.)

Figure 6. Applying low-resolution offscreen particles to the scene color buffer according to the stencil mask.

Figure 7. Rendering detail particles into the scene color buffer according to the stencil mask.

Figure 8. Final result.

As a result, we preserved the details and got a great performance boost! In this particular example, particles rendered 2-3 times faster with the optimization than without it.

(article is not finished and will be updated)